A brief analysis of the input_data data class in TensorFlow's examples.tutorials.mnist

The first dataset used in the TensorFlow tutorials is the classic MNIST handwritten digit dataset. TensorFlow wraps it in a ready-made dataset class, so loading the images, loading the labels, and feeding batches with next_batch() can all be done directly through input_data, which is convenient. Once you go further and want to train and test on your own data without these wrapped methods, however, you have to build your own dataset class. So let us first look for inspiration in the official `from tensorflow.examples.tutorials.mnist import input_data`.

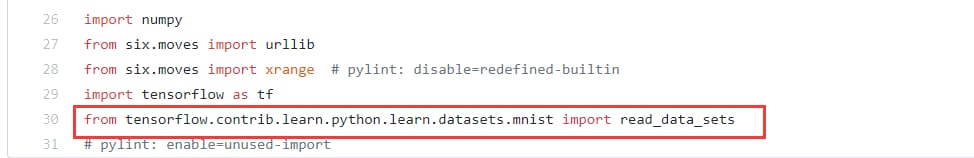

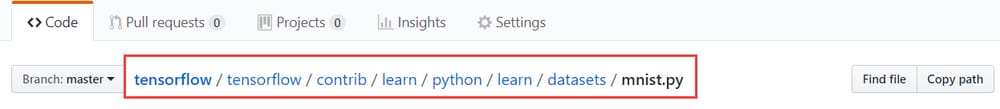

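First, on the official TensorFlow GitHub repository, follow the package's import path to find where it is defined.

Next, locate the code definition.

Then open mnist.py, which defines the dataset class and its methods.

The DataSet(object) class and its methods are defined as follows.

1. Definition of the DataSet(object) class.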

    # Imports used by this excerpt (TensorFlow 1.x module paths).
    import numpy
    from tensorflow.python.framework import dtypes
    from tensorflow.python.framework import random_seed


    class DataSet(object):
        """Container class for a dataset (deprecated).

        THIS CLASS IS DEPRECATED. See
        [contrib/learn/README.md](https://www.tensorflow.org/code/tensorflow/contrib/learn/README.md)
        for general migration instructions.
        """

        def __init__(self,
                     images,
                     labels,
                     fake_data=False,
                     one_hot=False,
                     dtype=dtypes.float32,
                     reshape=True,
                     seed=None):
            """Construct a DataSet.

            The `one_hot` argument is used only if fake_data is true.
            `dtype` can be `uint8`, which keeps the input as `[0, 255]`,
            or `float32`, which rescales it to `[0, 1]`.
            The `seed` argument allows convenient deterministic testing.
            """
            seed1, seed2 = random_seed.get_seed(seed)
            # If op level seed is not set, use whatever graph level seed is returned
            numpy.random.seed(seed1 if seed is None else seed2)
            dtype = dtypes.as_dtype(dtype).base_dtype
            if dtype not in (dtypes.uint8, dtypes.float32):
                raise TypeError(
                    'Invalid image dtype %r, expected uint8 or float32' % dtype)
            if fake_data:
                self._num_examples = 10000
                self.one_hot = one_hot
            else:
                assert images.shape[0] == labels.shape[0], (
                    'images.shape: %s labels.shape: %s' % (images.shape, labels.shape))
                self._num_examples = images.shape[0]

                # Convert shape from [num examples, rows, columns, depth]
                # to [num examples, rows*columns] (assuming depth == 1)
                if reshape:
                    assert images.shape[3] == 1
                    images = images.reshape(images.shape[0],
                                            images.shape[1] * images.shape[2])
                if dtype == dtypes.float32:
                    # Convert from [0, 255] -> [0.0, 1.0].
                    images = images.astype(numpy.float32)
                    images = numpy.multiply(images, 1.0 / 255.0)
            self._images = images
            self._labels = labels
            self._epochs_completed = 0
            self._index_in_epoch = 0

        @property
        def images(self):
            return self._images

        @property
        def labels(self):
            return self._labels

        @property
        def num_examples(self):
            return self._num_examples

        @property
        def epochs_completed(self):
            return self._epochs_completed
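To see the preprocessing step of the constructor in isolation, here is a minimal sketch that uses only NumPy. The helper name `flatten_and_scale` and the random stand-in images are assumptions made for illustration; they are not part of mnist.py.

```python
import numpy as np


def flatten_and_scale(images):
    """Flatten [N, rows, cols, 1] uint8 images to [N, rows*cols] float32 in [0, 1]."""
    # Same precondition as the `reshape` branch above: a single channel.
    assert images.ndim == 4 and images.shape[3] == 1
    flat = images.reshape(images.shape[0], images.shape[1] * images.shape[2])
    # Same rescaling as the float32 branch above: [0, 255] -> [0.0, 1.0].
    return flat.astype(np.float32) * (1.0 / 255.0)


# Hypothetical stand-in for MNIST: 3 random 28x28 grayscale images.
rng = np.random.RandomState(0)
raw = rng.randint(0, 256, size=(3, 28, 28, 1), dtype=np.uint8)
scaled = flatten_and_scale(raw)
print(scaled.shape, scaled.dtype)
```

For your own dataset, the same two steps apply as long as the images are single-channel; color images would need a different flattening rule.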

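2. Definition of the next_batch method.

The key points are that the method records the position in the dataset where the current read starts, and that each call advances this position to (index_in_epoch + batch_size). It also has to handle three cases: the first batch of the first epoch, the boundary where the end of one epoch runs into the start of the next, and the ordinary batches in between.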

    # Method of the DataSet class above.
    def next_batch(self, batch_size, fake_data=False, shuffle=True):
        """Return the next `batch_size` examples from this data set."""
        if fake_data:
            fake_image = [1] * 784
            if self.one_hot:
                fake_label = [1] + [0] * 9
            else:
                fake_label = 0
            return [fake_image for _ in range(batch_size)], [
                fake_label for _ in range(batch_size)
            ]
        start = self._index_in_epoch
        # First batch of the first epoch: shuffle once to break up the data order
        if self._epochs_completed == 0 and start == 0 and shuffle:
            perm0 = numpy.arange(self._num_examples)
            numpy.random.shuffle(perm0)
            self._images = self.images[perm0]
            self._labels = self.labels[perm0]
        # The batch runs past the end of the data: go to the next epoch
        if start + batch_size > self._num_examples:
            # Finished epoch
            self._epochs_completed += 1
            # Get the rest examples in this epoch
            rest_num_examples = self._num_examples - start
            images_rest_part = self._images[start:self._num_examples]
            labels_rest_part = self._labels[start:self._num_examples]
            # Shuffle the data again for the new epoch
            if shuffle:
                perm = numpy.arange(self._num_examples)
                numpy.random.shuffle(perm)
                self._images = self.images[perm]
                self._labels = self.labels[perm]
            # Start the next epoch and take the missing examples from its beginning
            start = 0
            self._index_in_epoch = batch_size - rest_num_examples
            end = self._index_in_epoch
            images_new_part = self._images[start:end]
            labels_new_part = self._labels[start:end]
            return numpy.concatenate(
                (images_rest_part, images_new_part), axis=0), numpy.concatenate(
                    (labels_rest_part, labels_new_part), axis=0)
        # Ordinary case: the batch lies entirely within the current epoch
        else:
            self._index_in_epoch += batch_size  # advance the read position
            end = self._index_in_epoch  # end position: start plus batch_size
            return self._images[start:end], self._labels[start:end]  # images and their labels
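The same bookkeeping can be reused for your own data. The following is a minimal sketch that depends only on NumPy; the class name `MiniDataSet`, its private random generator, and the toy data are assumptions made for illustration, not part of TensorFlow. Like the original, it assumes `batch_size` does not exceed the number of examples.

```python
import numpy as np


class MiniDataSet:
    """Hypothetical, simplified container that mimics DataSet.next_batch."""

    def __init__(self, images, labels, seed=None):
        assert images.shape[0] == labels.shape[0]
        self._images = images
        self._labels = labels
        self._num_examples = images.shape[0]
        self._index_in_epoch = 0
        self.epochs_completed = 0
        self._rng = np.random.RandomState(seed)

    def _shuffle(self):
        # Apply one permutation to both arrays so pairs stay aligned.
        perm = self._rng.permutation(self._num_examples)
        self._images = self._images[perm]
        self._labels = self._labels[perm]

    def next_batch(self, batch_size, shuffle=True):
        start = self._index_in_epoch
        # First batch of the first epoch: shuffle once before reading.
        if self.epochs_completed == 0 and start == 0 and shuffle:
            self._shuffle()
        if start + batch_size > self._num_examples:
            # Epoch boundary: keep the leftovers, reshuffle, then top up.
            self.epochs_completed += 1
            rest_images = self._images[start:]
            rest_labels = self._labels[start:]
            if shuffle:
                self._shuffle()
            end = batch_size - (self._num_examples - start)
            self._index_in_epoch = end
            return (np.concatenate((rest_images, self._images[:end]), axis=0),
                    np.concatenate((rest_labels, self._labels[:end]), axis=0))
        # Ordinary case: the batch fits inside the current epoch.
        self._index_in_epoch = start + batch_size
        end = self._index_in_epoch
        return self._images[start:end], self._labels[start:end]


# Toy data: 10 examples with 2 features each; labels equal the row index.
data = MiniDataSet(np.arange(20).reshape(10, 2), np.arange(10), seed=0)
for _ in range(4):
    x, y = data.next_batch(4, shuffle=False)
    print(y, data.epochs_completed)
```

With shuffling disabled, the third batch in this loop straddles the epoch boundary, so it combines the last two examples of the first epoch with the first two of the second.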

3. Definition of the read_data_sets method.

    # DEFAULT_SOURCE_URL, extract_images and extract_labels are defined elsewhere in mnist.py.
    def read_data_sets(train_dir,
                       fake_data=False,
                       one_hot=False,
                       dtype=dtypes.float32,
                       reshape=True,
                       validation_size=5000,
                       seed=None,
                       source_url=DEFAULT_SOURCE_URL):
        if fake_data:

            def fake():
                return DataSet(
                    [], [], fake_data=True, one_hot=one_hot, dtype=dtype, seed=seed)

            train = fake()
            validation = fake()
            test = fake()
            # Return the three (fake) datasets bundled together.
            return base.Datasets(train=train, validation=validation, test=test)

        if not source_url:  # empty string check
            source_url = DEFAULT_SOURCE_URL

        TRAIN_IMAGES = 'train-images-idx3-ubyte.gz'
        TRAIN_LABELS = 'train-labels-idx1-ubyte.gz'
        TEST_IMAGES = 't10k-images-idx3-ubyte.gz'
        TEST_LABELS = 't10k-labels-idx1-ubyte.gz'

        # base comes from: from tensorflow.contrib.learn.python.learn.datasets import base
        local_file = base.maybe_download(TRAIN_IMAGES, train_dir,
                                         source_url + TRAIN_IMAGES)
        # gfile comes from: from tensorflow.python.platform import gfile
        with gfile.Open(local_file, 'rb') as f:
            train_images = extract_images(f)

        local_file = base.maybe_download(TRAIN_LABELS, train_dir,
                                         source_url + TRAIN_LABELS)
        with gfile.Open(local_file, 'rb') as f:
            train_labels = extract_labels(f, one_hot=one_hot)

        local_file = base.maybe_download(TEST_IMAGES, train_dir,
                                         source_url + TEST_IMAGES)
        with gfile.Open(local_file, 'rb') as f:
            test_images = extract_images(f)

        local_file = base.maybe_download(TEST_LABELS, train_dir,
                                         source_url + TEST_LABELS)
        with gfile.Open(local_file, 'rb') as f:
            test_labels = extract_labels(f, one_hot=one_hot)

        if not 0 <= validation_size <= len(train_images):
            raise ValueError('Validation size should be between 0 and {}. Received: {}.'
                             .format(len(train_images), validation_size))

        # The first validation_size training examples become the validation set.
        validation_images = train_images[:validation_size]
        validation_labels = train_labels[:validation_size]
        train_images = train_images[validation_size:]
        train_labels = train_labels[validation_size:]

        options = dict(dtype=dtype, reshape=reshape, seed=seed)

        # Wrap the training, validation and test data in the DataSet class defined
        # above, so each split gets its methods and properties.
        train = DataSet(train_images, train_labels, **options)
        validation = DataSet(validation_images, validation_labels, **options)
        test = DataSet(test_images, test_labels, **options)

        return base.Datasets(train=train, validation=validation, test=test)


    def load_mnist(train_dir='MNIST-data'):
        # Convenience wrapper: load MNIST with the default options.
        return read_data_sets(train_dir)
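The validation split used in read_data_sets is easy to reproduce for your own arrays. This is a minimal sketch under the assumption that the data is already loaded into NumPy arrays; the function name `split_validation` and the dummy data are hypothetical and not part of mnist.py.

```python
import numpy as np


def split_validation(images, labels, validation_size):
    """Return ((train_images, train_labels), (val_images, val_labels))."""
    if not 0 <= validation_size <= len(images):
        raise ValueError(
            'Validation size should be between 0 and {}. Received: {}.'
            .format(len(images), validation_size))
    # As in read_data_sets, the first validation_size examples are held out.
    validation = (images[:validation_size], labels[:validation_size])
    train = (images[validation_size:], labels[validation_size:])
    return train, validation


# Dummy data: 100 flattened 28x28 images with labels cycling through 0..9.
images = np.zeros((100, 784), dtype=np.float32)
labels = np.arange(100) % 10
(train_x, train_y), (val_x, val_y) = split_validation(images, labels, 20)
print(train_x.shape, val_x.shape)
```

Because the split simply takes the leading examples, it is only representative if the data is not sorted by class; shuffling beforehand is a reasonable precaution for a custom dataset.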

Reference blog: https://blog.csdn.net/u013608336/article/details/78747102
