Pytorch-1-TX2: Installing PyTorch on the TX2 (tested personally)

This post has been updated. Please see the latest version: https://blog.csdn.net/qq_33869371/article/details/88591538

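The TX2 is an embedded deep-learning platform with a capable GPU, and its GPU has compute capability 6.2. This means the TX2 supports half-precision (FP16) computation well: a model can be trained fully on a desktop machine, ported to the TX2, and run in half precision for inference, which makes it a practical route to production deployment.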
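As a rough illustration of the compute-capability point, the sketch below asks PyTorch (once it is installed) for the GPU's capability and checks it against a small table. The table `FAST_FP16` and the helper names are assumptions made for this example, not part of PyTorch; only `torch.cuda.is_available` and `torch.cuda.get_device_capability` are real PyTorch calls.

```python
from typing import Optional, Tuple

# Compute capabilities treated here as having full-rate FP16 arithmetic.
# This set is an assumption based on NVIDIA's published throughput tables;
# 6.1 (desktop Pascal) is left out on purpose.
FAST_FP16 = {(5, 3), (6, 0), (6, 2)}


def has_fast_fp16(capability: Tuple[int, int]) -> bool:
    """Return True if the (major, minor) capability is expected to run FP16 well."""
    return capability in FAST_FP16 or capability[0] >= 7


def detect_capability() -> Optional[Tuple[int, int]]:
    """Ask PyTorch for the capability of GPU 0, or return None if that is not possible."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return tuple(torch.cuda.get_device_capability(0))


cap = detect_capability()
if cap is None:
    print("No CUDA-enabled PyTorch found yet")
else:
    print("compute capability:", cap, "fast FP16:", has_fast_fp16(cap))
```

On a TX2 with a working CUDA build, the detected capability should be (6, 2).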

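This article mainly describes how to build PyTorch 1.0.1 from source on the TX2 (the master branch of PyTorch is only slightly ahead of 1.0.1).

First, start from a reasonably clean JetPack system. Versions 3.2 to 3.3 work (the latest, 4.1.1, is also fine). To avoid unrelated incompatibility errors, it is best to reflash the TX2.

To reflash, download the JetPack system package for the TX2 from the NVIDIA website: https://developer.nvidia.com/embedded/jetpack

Steps

The following are the exact steps for installing PyTorch from source.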

A JetPack 3.2 system generally has two versions of Python: the python command maps to Python 2.7, while the python3 command maps to Python 3.5. Here python3 is the build environment, so the two must be kept apart; mixing them up causes build errors. Running which python3 shows where the Python 3.5 interpreter currently in use is located.
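To confirm from inside Python which interpreter is actually running, a minimal sketch like the one below can be saved to a file and run with python3. The helper name `is_supported` and the (3, 5) minimum are assumptions chosen to match the Python 3.5 mentioned above.

```python
import sys
from typing import Sequence, Tuple


def is_supported(version: Sequence[int], minimum: Tuple[int, int] = (3, 5)) -> bool:
    """Return True if the interpreter version is at least `minimum`."""
    return tuple(version[:2]) >= minimum


print("interpreter:", sys.executable)
print("version:", ".".join(str(part) for part in sys.version_info[:3]))
print("usable for this build:", is_supported(sys.version_info))
```

If the script reports a 2.7 interpreter, it was started with python rather than python3.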

Dependencies

Be sure to set up at least 1 GB of swap space beforehand (this is very important). For details, see the previous article: https://blog.csdn.net/qq_33869371/article/details/87706617
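One way to check the swap requirement before starting the long build is to read SwapTotal from /proc/meminfo. The sketch below assumes the usual Linux /proc/meminfo layout; the function names are made up for this example.

```python
import re
from typing import Optional


def swap_total_kib(meminfo_text: str) -> Optional[int]:
    """Extract the SwapTotal value (in KiB) from /proc/meminfo-style text."""
    match = re.search(r"^SwapTotal:\s+(\d+)\s+kB", meminfo_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def has_enough_swap(meminfo_text: str, minimum_gib: float = 1.0) -> bool:
    """Return True if the reported swap is at least `minimum_gib` GiB."""
    total = swap_total_kib(meminfo_text)
    return total is not None and total >= minimum_gib * 1024 * 1024


try:
    with open("/proc/meminfo") as handle:
        print("swap >= 1 GiB:", has_enough_swap(handle.read()))
except OSError:
    print("/proc/meminfo is not available on this system")
```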

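First, install the dependencies.

Note that the commands use pip3, which is bound to python3. If you are not sure which Python a pip command on your system is bound to, run it with --version (pip --version or pip3 --version) to see which Python it runs under. For example: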

pip3 --version

pip3 9.0.1 from path/to/lib/python3.5/site-packages/pip (python 3.5)
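The Python version sits in the parentheses at the end of that output. As a small sketch, it can be extracted like this; the function name `pip_python_version` is hypothetical, and the sample line is the one shown above.

```python
import re
from typing import Optional, Tuple


def pip_python_version(version_line: str) -> Optional[Tuple[int, int]]:
    """Return the (major, minor) Python version named at the end of `pip --version` output."""
    match = re.search(r"\(python (\d+)\.(\d+)\)", version_line)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


line = "pip3 9.0.1 from path/to/lib/python3.5/site-packages/pip (python 3.5)"
print(pip_python_version(line))
```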

In any case, use the pip that belongs to python3: install pip3 first, then install the components that the python3 environment needs.

sudo apt install libopenblas-dev libatlas-dev liblapack-dev

sudo apt install liblapacke-dev checkinstall # For OpenCV

sudo apt-get install python3-pip

pip3 install --upgrade pip==9.0.1

sudo apt-get install python3-dev

sudo pip3 install numpy scipy # This takes a little longer, around 20 to 30 minutes

sudo pip3 install pyyaml

sudo pip3 install scikit-build

sudo apt-get -y install cmake

sudo apt install libffi-dev

sudo pip3 install cffi

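After installing these, add the cuDNN lib and include paths. This step is needed because, although flashing the device also installs CUDA and cuDNN, the cuDNN paths on JetPack differ slightly from those on a regular Ubuntu system (for why they differ, see https://oldpan.me/archives/pytorch-gpu-ubuntu-nvidia-cuda90 ). The cuDNN paths therefore have to be added to the environment variables and activated.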

sudo gedit ~/.bashrc

export CUDNN_LIB_DIR=/usr/lib/aarch64-linux-gnu

export CUDNN_INCLUDE_DIR=/usr/include

source ~/.bashrc
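Before starting the build, it may be worth confirming that the two variables are visible in the current shell and point at real files. The following is a minimal sketch; the helper `cudnn_problems` is made up for this example, and the file names it looks for (libcudnn* and cudnn.h) are the conventional cuDNN names, assumed rather than taken from the original notes.

```python
import glob
import os
from typing import List


def cudnn_problems(lib_dir: str, include_dir: str) -> List[str]:
    """Return human-readable problems found with the two cuDNN directories."""
    problems = []
    if not glob.glob(os.path.join(lib_dir, "libcudnn*")):
        problems.append("no libcudnn* file in " + lib_dir)
    if not os.path.isfile(os.path.join(include_dir, "cudnn.h")):
        problems.append("no cudnn.h in " + include_dir)
    return problems


lib_dir = os.environ.get("CUDNN_LIB_DIR", "")
include_dir = os.environ.get("CUDNN_INCLUDE_DIR", "")
if not lib_dir or not include_dir:
    print("CUDNN_LIB_DIR / CUDNN_INCLUDE_DIR are not set in this shell")
else:
    for problem in cudnn_problems(lib_dir, include_dir) or ["cuDNN paths look fine"]:
        print(problem)
```

Note that a build started with sudo may not inherit variables exported in ~/.bashrc, so it helps to run the check the same way the build will be run.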

Download the PyTorch source

Clone the latest PyTorch source directly from GitHub, then use pip3 to install all the required libraries and update the third-party libraries.

# You can speed up the operation

sudo nvpmodel -m 0 # Switch the operating mode to maximum

sudo ~/jetson_clocks.sh # force on fan max speed

wget https://rpmfind.net/linux/mageia/distrib/cauldron/aarch64/media/core/release/ninja-1.9.0-1.mga7.aarch64.rpm

# The commands below were recorded with ninja-1.8.2-3; with the 1.9.0-1 package downloaded above, substitute its file name throughout. If the link does not open, browse the parent directory of the URL

sudo add-apt-repository universe

sudo apt-get update

sudo apt-get install alien

sudo apt-get install nano

sudo alien ninja-1.8.2-3.mga7.aarch64.rpm

#If the previous line succeeds, install the generated .deb with sudo dpkg -i and skip ahead; if it fails, continue with the steps below

sudo alien -g ninja-1.8.2-3.mga7.aarch64.rpm

cd ninja-1.8.2

sudo nano debian/control

#In the Architecture field, change "aarch64" to "aarch64, arm64"

sudo debian/rules binary

cd ..

sudo dpkg -i ninja_1.8.2-4_arm64.deb

sudo apt install ninja-build
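After these installs, a quick check that the build tools are actually on PATH can save a failed build later. This is only a sketch; the helper name and the list of tool names are assumptions for the example.

```python
import shutil
from typing import Iterable, List


def missing_tools(names: Iterable[str]) -> List[str]:
    """Return the names that cannot be found on PATH."""
    return [name for name in names if shutil.which(name) is None]


print("missing:", missing_tools(["ninja", "cmake", "git", "python3", "pip3"]))
```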

# Download the source first. The latest version seemed to have some build problems, so an older release (0.4.1) is used here; this command took a long time to track down

git clone --recursive --depth 1 https://github.com/pytorch/pytorch.git -b v${PYTORCH_VERSION=0.4.1}

https://blog.csdn.net/qq_25689397/article/details/50932575

git clone --recursive https://github.com/pytorch/pytorch.git

git clone --recursive https://gitee.com/wangdong_cn_admin/pytorch

The download took nearly 30 minutes (it kept getting interrupted because the network was slow).
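On a slow or unreliable connection, a small retry wrapper around the download commands can help. The sketch below is generic: `run_with_retries` is a hypothetical helper, and the example command in the final comment is an illustration rather than a step from the original notes.

```python
import subprocess
import sys
import time
from typing import Sequence


def run_with_retries(cmd: Sequence[str], attempts: int = 3, delay_s: float = 5.0) -> bool:
    """Run `cmd` until it exits with status 0 or the attempts are used up."""
    for attempt in range(1, attempts + 1):
        if subprocess.call(list(cmd)) == 0:
            return True
        print("attempt %d of %d failed" % (attempt, attempts), file=sys.stderr)
        if attempt < attempts:
            time.sleep(delay_s)
    return False


# Example call (commented out so that nothing is downloaded by accident):
# run_with_retries(["git", "submodule", "update", "--init", "--recursive"])
```

Retrying the submodule update inside an already cloned repository is usually cheaper than repeating the whole recursive clone.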

python3 -m pip install --upgrade pip

python3 -m pip install cmake

nvidia@tegra-ubuntu:~$ sudo pip3 install -r requirements.txt

The directory '/home/nvidia/.cache/pip/http' or its parent directory is not owned by the current user and the cache has been disabled. If executing pip with sudo, you may want sudo's -H flag.

The directory '/home/nvidia/.cache/pip' or its parent directory is not owned by the current user and caching wheels has been disabled. check the permissions and owner of that directory. If executing pip with sudo, you may want sudo's -H flag.

Could not open requirements file: [Errno 2] No such file or directory: 'requirements.txt'

nvidia@tegra-ubuntu:~$

#Go to the folder

cd pytorch

#Update the submodules

git submodule update --init --recursive

#Install the dependencies

sudo pip3 install -U setuptools

sudo pip3 install -r requirements.txt

#Perform the installation

sudo python3 setup.py install

#Use install directly here. Some guides online use develop or build instead; I experimented with those for a week and they did not work

#Then install one more dependency

pip3 install tensorboardX

#download another folder

git clone https://github.com/pytorch/vision

cd vision

#install

sudo python3 setup.py install
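Once both installs finish, a short smoke test can confirm that the build is usable. The sketch below is an assumption about what is worth checking, not part of the original procedure; `describe_install` is a made-up helper. It is best run from a directory other than the PyTorch source tree, because the local torch folder can shadow the installed package.

```python
from typing import Dict, Optional


def describe_install() -> Optional[Dict[str, object]]:
    """Return basic facts about the installed torch package, or None if it cannot be imported."""
    try:
        import torch
    except ImportError:
        return None
    info = {"version": torch.__version__, "cuda": torch.cuda.is_available()}
    if info["cuda"]:
        # Run one tiny half-precision operation on the GPU as a smoke test.
        x = torch.ones(2, 2, device="cuda").half()
        info["fp16_sum"] = float((x + x).sum())
    return info


print(describe_install() or "torch is not importable from this interpreter")
```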

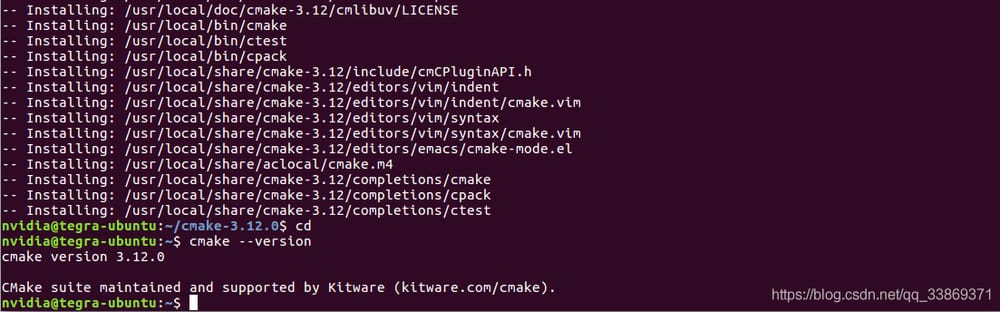

Update cmake.

https://cmake.org/files/v3.14/
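The "string does not recognize sub-command APPEND" error recorded in the build logs in these notes is typical of a cmake that is too old, since string(APPEND ...) is documented as available from CMake 3.4 onward. A minimal sketch for checking the installed version follows; the helper names are hypothetical, and the 3.4 threshold reflects only that one subcommand, not PyTorch's full requirement.

```python
import re
import subprocess
from typing import Optional, Tuple


def parse_cmake_version(output: str) -> Optional[Tuple[int, ...]]:
    """Pull the numeric version out of `cmake --version` output."""
    match = re.search(r"cmake version (\d+(?:\.\d+)*)", output)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(1).split("."))


def installed_cmake_version(executable: str = "cmake") -> Optional[Tuple[int, ...]]:
    """Run `cmake --version` and parse it; return None if cmake cannot be run."""
    try:
        output = subprocess.check_output([executable, "--version"], universal_newlines=True)
    except (OSError, subprocess.CalledProcessError):
        return None
    return parse_cmake_version(output)


# string(APPEND ...) is documented as available from CMake 3.4 onward.
MINIMUM = (3, 4)
version = installed_cmake_version()
print("cmake:", version, "new enough:", version is not None and version >= MINIMUM)
```

If several cmake binaries are installed, passing the full path as `executable` shows which one a given build would pick up.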

nvidia@tegra-ubuntu:~/pytorch-0.3.0$ git submodule update --init

nvidia@tegra-ubuntu:~/pytorch-0.3.0$ sudo python3 setup.py install

[sudo] password for nvidia:

fatal: ambiguous argument 'HEAD': unknown revision or path not in the working tree.

Use '--' to separate paths from revisions, like this:

'git <command> [<revision>...] -- [<file>...]'

running install

running build_deps

-- The C compiler identification is GNU 5.4.0

-- The CXX compiler identification is GNU 5.4.0

-- Check for working C compiler: /usr/bin/cc

-- Check for working C compiler: /usr/bin/cc -- works

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Detecting C compile features

-- Detecting C compile features -- done

-- Check for working CXX compiler: /usr/bin/c++

-- Check for working CXX compiler: /usr/bin/c++ -- works

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Try OpenMP C flag = [-fopenmp]

-- Performing Test OpenMP_FLAG_DETECTED

-- Performing Test OpenMP_FLAG_DETECTED - Success

-- Try OpenMP CXX flag = [-fopenmp]

-- Performing Test OpenMP_FLAG_DETECTED

-- Performing Test OpenMP_FLAG_DETECTED - Success

-- Found OpenMP: -fopenmp

-- Compiling with OpenMP support

-- Could not find hardware support for NEON on this machine.

-- No OMAP3 processor on this machine.

-- No OMAP4 processor on this machine.

-- asimd/Neon found with compiler flag : -D__NEON__

-- Looking for cpuid.h

-- Looking for cpuid.h - not found

-- Performing Test NO_GCC_EBX_FPIC_BUG

-- Performing Test NO_GCC_EBX_FPIC_BUG - Failed

...........

...............

[100%] Linking CXX static library libTHD.a

[100%] Built target THD

Install the project...

-- Install configuration: "Release"

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/lib/libTHD.a

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/THD.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/base/DataChannelRequest.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/base/DataChannel.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/base/THDGenerateAllTypes.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/base/ChannelType.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/base/Cuda.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/base/TensorDescriptor.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/process_group/General.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/process_group/Collectives.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/master_worker/master/State.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/master_worker/master/generic/THDTensorRandom.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/master_worker/master/generic/THDTensorMath.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/master_worker/master/generic/THDTensorLapack.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/master_worker/master/generic/THDTensor.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch/lib/tmp_install/include/THD/master_worker/master/generic/THDTensorCopy.h

-- Installing: /home/nvidia/pytorch-0.3.0/torch

https://blog.csdn.net/cm_cyj_1116/article/details/79316115

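Put the downloaded archive under /usr and extract it. The archive name below is as recorded in the original notes; an i386 binary will not run on the TX2's aarch64 CPU, so on the device itself the source tarball from the same directory would have to be built instead.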

tar zxvf cmake-3.14.0-Linux-i386.tar.gz

https://blog.csdn.net/learning_tortosie/article/details/80593956

nvidia@tegra-ubuntu:~/pytorch-0.4.1$ git submodule update --init

nvidia@tegra-ubuntu:~/pytorch-0.4.1$ sudo python3 setup.py install

[sudo] password for nvidia:

Sorry, try again.

[sudo] password for nvidia:

fatal: ambiguous argument 'HEAD': unknown revision or path not in the working tree.

Use '--' to separate paths from revisions, like this:

'git <command> [<revision>...] -- [<file>...]'

running install

running build_deps

+ USE_CUDA=0

+ USE_ROCM=0

+ USE_NNPACK=0

+ USE_MKLDNN=0

+ USE_GLOO_IBVERBS=0

+ USE_DISTRIBUTED_MW=0

+ FULL_CAFFE2=0

+ [[ 9 -gt 0 ]]

+ case "$1" in

+ USE_CUDA=1

+ shift

+ [[ 8 -gt 0 ]]

+ case "$1" in

+ USE_NNPACK=1

+ shift

+ [[ 7 -gt 0 ]]

+ case "$1" in

+ break

+ CMAKE_INSTALL='make install'

+ USER_CFLAGS=

+ USER_LDFLAGS=

+ [[ -n '' ]]

+ [[ -n '' ]]

+ [[ -n '' ]]

++ uname

+ '[' Linux == Darwin ']'

++ dirname tools/build_pytorch_libs.sh

++ cd tools/..

+++ pwd

++ printf '%q\n' /home/nvidia/pytorch-0.4.1

+ PWD=/home/nvidia/pytorch-0.4.1

+ BASE_DIR=/home/nvidia/pytorch-0.4.1

+ TORCH_LIB_DIR=/home/nvidia/pytorch-0.4.1/torch/lib

+ INSTALL_DIR=/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install

+ THIRD_PARTY_DIR=/home/nvidia/pytorch-0.4.1/third_party

+ CMAKE_VERSION=cmake

+ C_FLAGS=' -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/TH" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THC" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THS" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THCS" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THNN" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THCUNN"'

+ C_FLAGS=' -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/TH" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THC" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THS" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THCS" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THNN" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THCUNN" -DOMPI_SKIP_MPICXX=1'

+ LDFLAGS='-L"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/lib" '

+ LD_POSTFIX=.so

++ uname

+ [[ Linux == \D\a\r\w\i\n ]]

+ LDFLAGS='-L"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/lib" -Wl,-rpath,$ORIGIN'

+ CPP_FLAGS=' -std=c++11 '

+ GLOO_FLAGS=

+ THD_FLAGS=

+ NCCL_ROOT_DIR=/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install

+ [[ 1 -eq 1 ]]

+ GLOO_FLAGS='-DUSE_CUDA=1 -DNCCL_ROOT_DIR=/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install'

+ [[ 0 -eq 1 ]]

+ [[ 0 -eq 1 ]]

+ CWRAP_FILES='/home/nvidia/pytorch-0.4.1/torch/lib/ATen/Declarations.cwrap;/home/nvidia/pytorch-0.4.1/torch/lib/THNN/generic/THNN.h;/home/nvidia/pytorch-0.4.1/torch/lib/THCUNN/generic/THCUNN.h;/home/nvidia/pytorch-0.4.1/torch/lib/ATen/nn.yaml'

+ CUDA_NVCC_FLAGS=' -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/TH" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THC" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THS" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THCS" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THNN" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THCUNN" -DOMPI_SKIP_MPICXX=1'

+ [[ -z '' ]]

+ CUDA_DEVICE_DEBUG=0

+ '[' -z 6 ']'

+ BUILD_TYPE=Release

+ [[ -n '' ]]

+ [[ -n '' ]]

+ echo 'Building in Release mode'

Building in Release mode

+ mkdir -p torch/lib/tmp_install

+ for arg in '"$@"'

+ [[ nccl == \n\c\c\l ]]

+ pushd /home/nvidia/pytorch-0.4.1/third_party

~/pytorch-0.4.1/third_party ~/pytorch-0.4.1

+ build_nccl

+ mkdir -p build/nccl

+ pushd build/nccl

~/pytorch-0.4.1/third_party/build/nccl ~/pytorch-0.4.1/third_party ~/pytorch-0.4.1

+ cmake ../../nccl -DCMAKE_MODULE_PATH=/home/nvidia/pytorch-0.4.1/cmake/Modules_CUDA_fix -DCMAKE_BUILD_TYPE=Release -DCMAKE_INSTALL_PREFIX=/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install '-DCMAKE_C_FLAGS= -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/TH" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THC" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THS" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THCS" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THNN" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THCUNN" -DOMPI_SKIP_MPICXX=1 ' '-DCMAKE_CXX_FLAGS= -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/TH" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THC" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THS" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THCS" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THNN" -I"/home/nvidia/pytorch-0.4.1/torch/lib/tmp_install/include/THCUNN" -DOMPI_SKIP_MPICXX=1 -std=c++11 ' -DCMAKE_SHARED_LINKER_FLAGS= -DCMAKE_UTILS_PATH=/home/nvidia/pytorch-0.4.1/cmake/public/utils.cmake -DNUM_JOBS=6

CMake Error at /home/nvidia/pytorch-0.4.1/cmake/Modules_CUDA_fix/upstream/FindPackageHandleStandardArgs.cmake:247 (string):

string does not recognize sub-command APPEND

Call Stack (most recent call first):

/home/nvidia/pytorch-0.4.1/cmake/Modules_CUDA_fix/upstream/FindCUDA.cmake:1104 (find_package_handle_standard_args)

/home/nvidia/pytorch-0.4.1/cmake/Modules_CUDA_fix/FindCUDA.cmake:11 (include)

CMakeLists.txt:5 (FIND_PACKAGE)

CMake Error at /home/nvidia/pytorch-0.4.1/cmake/Modules_CUDA_fix/upstream/FindPackageHandleStandardArgs.cmake:247 (string):

string does not recognize sub-command APPEND

Call Stack (most recent call first):

/home/nvidia/pytorch-0.4.1/cmake/Modules_CUDA_fix/upstream/

nvidia@tegra-ubuntu:~$ git clone --recursive https://github.com/pytorch/pytorch.git

Cloning into 'pytorch'...

remote: Enumerating objects: 317, done.

remote: Counting objects: 100% (317/317), done.

remote: Compressing objects: 100% (217/217), done.

error: RPC failed; curl 56 GnuTLS recv error (-24): Decryption has failed.

fatal: The remote end hung up unexpectedly

fatal: early EOF

fatal: index-pack failed

[ 64%] Building CXX object caffe2/CMakeFiles/caffe2.dir/sgd/clip_tensor_op.cc.o

[ 64%] Building CXX object caffe2/CMakeFiles/caffe2.dir/sgd/ftrl_op.cc.o

[ 64%] Building CXX object caffe2/CMakeFiles/caffe2.dir/sgd/gftrl_op.cc.o

[ 64%] Building CXX object caffe2/CMakeFiles/caffe2.dir/sgd/iter_op.cc.o

[ 64%] Building CXX object caffe2/CMakeFiles/caffe2.dir/sgd/lars_op.cc.o

[ 64%] Building CXX object caffe2/CMakeFiles/caffe2.dir/sgd/learning_rate_adaption_op.cc.o

[ 64%] Building CXX object caffe2/CMakeFiles/caffe2.dir/sgd/learning_rate_op.cc.o

[ 65%] Building CXX object caffe2/CMakeFiles/caffe2.dir/sgd/momentum_sgd_op.cc.o

[ 65%] Building CXX object caffe2/CMakeFiles/caffe2.dir/sgd/rmsprop_op.cc.o

[ 65%] Building CXX object caffe2/CMakeFiles/caffe2.dir/sgd/wngrad_op.cc.o

[ 65%] Building CXX object caffe2/CMakeFiles/caffe2.dir/sgd/yellowfin_op.cc.o

[ 65%] Building CXX object caffe2/CMakeFiles/caffe2.dir/share/contrib/nnpack/conv_op.cc.o

[ 65%] Building CXX object caffe2/CMakeFiles/caffe2.dir/share/contrib/depthwise/depthwise3x3_conv_op.cc.o

[ 65%] Building CXX object caffe2/CMakeFiles/caffe2.dir/transforms/common_subexpression_elimination.cc.o

[ 65%] Building CXX object caffe2/CMakeFiles/caffe2.dir/transforms/conv_to_nnpack_transform.cc.o

[ 65%] Building CXX object caffe2/CMakeFiles/caffe2.dir/transforms/pattern_net_transform.cc.o

[ 65%] Building CXX object caffe2/CMakeFiles/caffe2.dir/transforms/single_op_transform.cc.o

[ 65%] Linking CXX shared library ../lib/libcaffe2.so

[ 65%] Built target caffe2

Makefile:140: recipe for target 'all' failed

make: *** [all] Error 2

Failed to run 'bash ../tools/build_pytorch_libs.sh --use-cuda --use-fbgemm --use-nnpack --use-qnnpack caffe2'

nvidia@tegra-ubuntu:~/pytorch-stable$

Problems

sudo python3 setup.py install

fatal: ambiguous argument 'HEAD': unknown revision or path not in the working tree.

Use '--' to separate paths from revisions, like this:

'git <command> [<revision>...] -- [<file>...]'

Building wheel torch-1.1.0a0

-- Building version 1.1.0a0

Could not find /home/nvidia/pytorch/third_party/gloo/CMakeLists.txt

Did you run 'git submodule update --init --recursive'?
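This message means that a submodule directory such as third_party/gloo is empty, which usually happens when the recursive clone was interrupted. As a sketch, the check below lists every path named in .gitmodules whose directory is missing or empty; the function name is made up for this example, and it assumes it is run from the root of the PyTorch checkout.

```python
import os
import re
from typing import List


def empty_submodules(repo_root: str) -> List[str]:
    """List submodule paths from .gitmodules whose directories are missing or empty."""
    gitmodules = os.path.join(repo_root, ".gitmodules")
    if not os.path.isfile(gitmodules):
        return []
    with open(gitmodules) as handle:
        paths = re.findall(r"^\s*path\s*=\s*(\S+)\s*$", handle.read(), re.MULTILINE)
    empty = []
    for path in paths:
        full = os.path.join(repo_root, path)
        if not os.path.isdir(full) or not os.listdir(full):
            empty.append(path)
    return empty


for path in empty_submodules("."):
    print("submodule not checked out:", path)
```

If anything is listed, re-running git submodule update --init --recursive inside the repository should fetch the missing pieces before the build is attempted again.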

https://devtalk.nvidia.com/default/topic/1041716/jetson-agx-xavier/pytorch-install-problem/

References

https://m.oldpan.me/archives/nvidia-jetson-tx2-source-build-pytorch

https://blog.csdn.net/qq_36118564/article/details/85417331

https://blog.csdn.net/zsfcg/article/details/84099869

https://rpmfind.net/linux/mageia/distrib/cauldron/aarch64/media/core/release/
