OpenCV-Butterworth ローパスおよびハイパスフィルタ (C++)

2022-02-11 07:14:06

著者 スティーブン・ザイ ティエンバオ

著作権について 著作権は著者に帰属します。商業的な複製は著者に、非商業的な複製は出典を明記してください。

シナリオの要件

画像処理を行う場合、フィルタリングはホームランです。今日はバターワースフィルタの実装を紹介します。

スペクトルにおいて、低周波は主に滑らかな領域の画像全体の階調分布に対応し、高周波はエッジやノイズなど画像の細かい部分に対応することはよく知られている。バターワースフィルタは、最大フラットフィルタと呼ばれる。通過帯域ではリップルのない最大にフラットな周波数応答曲線を描き、停止帯域では徐々に減少してゼロになるのが特徴で、公式や具体的な原理はもはや記載されていません、Baiduはすべて持っています、次は難しいところですが、C++ & OpenCVのコード実装です。

関連する関数コードのC++実装

// Butterworth low-pass filter kernel function

cv::Mat butterworth_low_kernel(cv::Mat &scr, float sigma, int n)

{

cv::Mat butterworth_low_pass(scr.size(), CV_32FC1); //, CV_32FC1

float D0 = sigma;// the smaller the radius D0, the larger the blur; the larger the radius D0, the smaller the blur

for (int i = 0; i < scr.rows; i++) {

for (int j = 0; j < scr.cols; j++) {

float d = sqrt(pow(float(i - scr.rows / 2), 2) + pow(float(j - scr.cols / 2), 2));//numerator, compute pow must be float type

butterworth_low_pass.at<float>(i, j) = 1.0f / (1.0f + pow(d / D0, 2 * n));

}

}

return butterworth_low_pass;

}

// Butterworth low-pass filtering

cv::Mat butterworth_low_pass_filter(cv::Mat &src, float d0, int n)

{

// H = 1 / (1+(D/D0)^2n) n denotes the number of Butterworth filters

// order n=1 no ringing and negative order n=2 slight ringing and negative order n=5 significant ringing and negative order n=20 similar to ILPF

cv::Mat padded = image_make_border(src);

cv::Mat butterworth_kernel = butterworth_low_kernel(padded, d0, n);

cv::Mat result = frequency_filter(padded, butterworth_kernel);

return result;

}

// Butterworth high-pass filtering kernel function

cv::Mat butterworth_high_kernel(cv::Mat &scr, float sigma, int n)

{

cv::Mat butterworth_high_pass(scr.size(), CV_32FC1); //, CV_32FC1

float D0 = (float)sigma; // the smaller the radius D0, the larger the blur; the larger the radius D0, the smaller the blur

for (int i = 0; i < scr.rows; i++) {

for (int j = 0; j < scr.cols; j++) {

float d = sqrt(pow(float(i - scr.rows / 2), 2) + pow(float(j - scr.cols / 2), 2));//numerator, compute pow must be float type

butterworth_high_pass.at<float>(i, j) = 1.0f-1.0f / (1.0f + pow(d / D0, 2 * n));

}

}

return butterworth_high_pass;

}

// Butterworth high pass filtering

cv::Mat butterworth_high_pass_filter(cv::Mat &src, float d0, int n)

{

cv::Mat padded = image_make_border(src);

cv::Mat butterworth_kernel = butterworth_high_kernel(padded, d0, n);

cv::Mat result = frequency_filter(padded, butterworth_kernel);

return result;

}

// Frequency filtering

cv::Mat frequency_filter(cv::Mat &scr, cv::Mat &blur)

{

cv::Mat mask = scr == scr;

scr.setTo(0.0f, ~mask);

//create channel, store real and imaginary parts after dft (CV_32F, must be a single channel number)

cv::Mat plane[] = { scr.clone(), cv::Mat::zeros(scr.size() , CV_32FC1) };

cv::Mat complexIm;

cv::merge(plane, 2, complexIm); // merge channels (merge two matrices into a 2-channel Mat-like container)

cv::dft(complexIm, complexIm); // perform Fourier transform and save the result in itself

// separate channels (array separation)

cv::split(complexIm, plane);

// The following operation is frequency domain migration

fftshift(plane[0], plane[1]);

// product of ***************** filter function and DFT result ****************

cv::Mat blur_r, blur_i, BLUR;

cv::multiply(plane[0], blur, blur_r); // filter (real part multiplied by the corresponding element of the filter template)

cv::multiply(plane[1], blur, blur_i); // filter (the imaginary part is multiplied by the corresponding element of the filter template)

cv::Mat plane1[] = { blur_r, blur_i };

// move back again for inversion

fftshift(plane1[0], plane1[1]);

cv::merge(plane1, 2, BLUR); // merge the real part with the imaginary part

cv::idft(BLUR, BLUR); // idft also results in a complex number

BLUR = BLUR / BLUR.rows / BLUR.cols;

cv::split(BLUR, plane); // separate channels, mainly to get channels

return plane[0];

}

// Image boundary processing

cv::Mat image_make_border(cv::Mat &src)

{

int w = cv::getOptimalDFTSize(src.cols); // Get the optimal width of the DFT transform

int h = cv::getOptimalDFTSize(src.rows); // get the optimal height of the DFT transformation

cv::Mat padded;

// constant method to expand image boundaries, constant = 0

cv::copyMakeBorder(src, padded, 0, h - src.rows, 0, w - src.cols, cv::BORDER_CONSTANT, cv::Scalar::all(0));

padded.convertTo(padded, CV_32FC1);

return padded;

}

// implement the grid function of the frequency domain filter

void getcart(int rows, int cols, cv::Mat &x, cv::Mat &y) {

x.create(rows, cols, CV_32FC1);

y.create(rows, cols, CV_32FC1);

// set the boundaries

// calculate the values of other positions

for (int i = 0; i < rows; ++i) {

if (i <= rows / 2) {

x.row(i) = i;

}

else {

x.row(i) = i - rows;

}

}

for (int i = 0; i < cols; ++i) {

if (i <= cols / 2) {

y.col(i) = i;

}

else {

y.col(i) = i - cols;

}

}

}

// fft transform followed by spectrum shift

void fftshift(cv::Mat &plane0, cv::Mat &plane1)

{

// The following operation moves the image (zero frequency shift to the center)

int cx = plane0.cols / 2;

int cy = plane0.rows / 2;

cv::Mat part1_r(plane0, cv::Rect(0, 0, cx, cy)); // element coordinates are represented as (cx, cy)

cv::Mat part2_r(plane0, cv::Rect(cx, 0, cx, cy));

cv::Mat part3_r(plane0, cv::Rect(0, cy, cx, cy));

cv::Mat part4_r(plane0, cv::Rect(cx, cy, cx, cy));

cv::Mat temp;

part1_r.copyTo(temp); //swap top-left and bottom-right positions (real part)

part4_r.copyTo(part1_r);

temp.copyTo(part4_r);

part2_r.copyTo(temp); //exchange position between top-right and bottom-left (real part)

part3_r.copyTo(part2_r);

temp.copyTo(part3_r);

cv::Mat part1_i(plane1, cv::Rect(0, 0, cx, cy)); //element coordinates (cx,cy)

cv::Mat part2_i(plane1, cv::Rect(cx, 0, cx, cy));

cv::Mat part3_i(plane1, cv::Rect(0, cy, cx, cy));

cv::Mat part4_i(plane1, cv::Rect(cx, cy, cx, cy));

part1_i.copyTo(temp); //swap top-left and bottom-right positions (imaginary part)

part4_i.copyTo(part1_i);

temp.copyTo(part4_i);

part2_i.copyTo(temp); //exchange position between upper right and lower left (imaginary part)

part3_i.copyTo(part2_i);

temp.copyTo(part3_i);

}

テストコード

#include<iostream>

#include<opencv2/opencv.hpp>

#include<ctime>

using namespace std;

using namespace cv;

cv::Mat butterworth_low_kernel(cv::Mat &scr, float sigma, int n);

cv::Mat butterworth_low_pass_filter(cv::Mat &src, float d0, int n);

cv::Mat butterworth_high_kernel(cv::Mat &scr, float sigma, int n);

cv::Mat butterworth_high_pass_filter(cv::Mat &src, float d0, int n);

cv::Mat frequency_filter(cv::Mat &scr, cv::Mat &blur);

cv::Mat image_make_border(cv::Mat &src);

void fftshift(cv::Mat &plane0, cv::Mat &plane1);

void getcart(int rows, int cols, cv::Mat &x, cv::Mat &y);

Mat powZ(cv::InputArray src, double power);

Mat sqrtZ(cv::InputArray src);

int main(void)

{

Mat test = imread("tangsan.jpg", 0);

float D0 = 50.0f;

float D1 = 5.0f;

Mat lowpass = butterworth_low_pass_filter(test, D0,2);

Mat highpass = butterworth_high_pass_filter(test, D1, 2);

imshow("original", test);

imshow("low pass", lowpass / 255); // lowpass data are relatively large, 0-255, imshow for float type Mat display need to divide by 255

imshow("high pass", highpass / 255); // highpass data are relatively large, 0-255, imshow for the float type Mat display need to divide by 255

waitKey(0);

system("pause");

return 0;

}

// Butterworth low-pass filter kernel function

cv::Mat butterworth_low_kernel(cv::Mat &scr, float sigma, int n)

{

cv::Mat butterworth_low_pass(scr.size(), CV_32FC1); //, CV_32FC1

float D0 = sigma;// the smaller the radius D0, the larger the blur; the larger the radius D0, the smaller the blur

for (int i = 0; i < scr.rows; i++) {

for (int j = 0; j < scr.cols; j++) {

float d = sqrt(pow(float(i - scr.rows / 2), 2) + pow(float(j - scr.cols / 2), 2));//numerator, compute pow must be float type

butterworth_low_pass.at<float>(i, j) = 1.0f / (1.0f + pow(d / D0, 2 * n));

}

}

return butterworth_low_pass;

}

// Butterworth low-pass filtering

cv::Mat butterworth_low_pass_filter(cv::Mat &src, float d0, int n)

{

// H = 1 / (1+(D/D0)^2n) n denotes the number of Butterworth filters

// order n=1 no ringing and negative order n=2 slight ringing and negative order n=5 significant ringing and negative order n=20 similar to ILPF

cv::Mat padded = image_make_border(src);

cv::Mat butterworth_kernel = butterworth_low_kernel(padded, d0, n);

cv::Mat result = frequency_filter(padded, butterworth_kernel);

return result;

}

// Butterworth high-pass filtering kernel function

cv::Mat butterworth_high_kernel(cv::Mat &scr, float sigma, int n)

{

cv::Mat butterworth_high_pass(scr.size(), CV_32FC1); //, CV_32FC1

float D0 = (float)sigma; // the smaller the radius D0, the larger the blur; the larger the radius D0, the smaller the blur

for (int i = 0; i < scr.rows; i++) {

for (int j = 0; j < scr.cols; j++) {

float d = sqrt(pow(float(i - scr.rows / 2), 2) + pow(float(j - scr.cols / 2), 2));//numerator, compute pow must be float type

butterworth_high_pass.at<float>(i, j) = 1.0f-1.0f / (1.0f + pow(d / D0, 2 * n));

}

}

return butterworth_high_pass;

}

// Butterworth high pass filtering

cv::Mat butterworth_high_pass_filter(cv::Mat &src, float d0, int n)

{

cv::Mat padded = image_make_border(src);

cv::Mat butterworth_kernel = butterworth_high_kernel(padded, d0, n);

cv::Mat result = frequency_filter(padded, butterworth_kernel);

return result;

}

// Frequency filtering

cv::Mat frequency_filter(cv::Mat &scr, cv::Mat &blur)

{

cv::Mat mask = scr == scr;

scr.setTo(0.0f, ~mask);

//create channel, store real and imaginary parts after dft (CV_32F, must be a single channel number)

cv::Mat plane[] = { scr.clone(), cv::Mat::zeros(scr.size() , CV_32FC1) };

cv::Mat complexIm;

cv::merge(plane, 2, complexIm); // merge channels (merge two matrices into a 2-channel Mat-like container)

cv::dft(complexIm, complexIm); // perform Fourier transform and save the result in itself

// separate channels (array separation)

cv::split(complexIm, plane);

// The following operation is a frequency domain migration

fftshift(plane[0], plane[1]);

// product of ***************** filter function and DFT result ****************

cv::Mat blur_r, blur_i, BLUR;

cv::multiply(plane[0], blur, blur_r); // filter (real part multiplied by the corresponding element of the filter template)

cv::multiply(plane[1], blur, blur_i); // filter (the imaginary part is multiplied by the corresponding element of the filter template)

cv::Mat plane1[] = { blur_r, blur_i };

// move back again for inversion

fftshift(plane1[0], plane1[1]);

cv::merge(plane1, 2, BLUR); // merge the real part with the imaginary part

cv::idft(BLUR, BLUR); // idft also results in a complex number

BLUR = BLUR / BLUR.rows / BLUR.cols;

cv::split(BLUR, plane); // separate channels, mainly to get channels

return plane[0];

}

// Image boundary processing

cv::Mat image_make_border(cv::Mat &src)

{

int w = cv::getOptimalDFTSize(src.cols); // Get the optimal width of the DFT transform

int h = cv::getOptimalDFTSize(src.rows); // Get the optimal height of the DFT transformation

cv::Mat padded;

// constant method to expand image boundaries, constant = 0

cv::copyMakeBorder(src, padded, 0, h - src.rows, 0, w - src.cols, cv::BORDER_CONSTANT, cv::Scalar::all(0));

padded.convertTo(padded, CV_32FC1);

return padded;

}

// implement the grid function of the frequency domain filter

void getcart(int rows, int cols, cv::Mat &x, cv::Mat &y) {

x.create(rows, cols, CV_32FC1);

y.create(rows, cols, CV_32FC1);

// set the boundaries

// calculate the values of other positions

for (int i = 0; i < rows; ++i) {

if (i <= rows / 2) {

x.row(i) = i;

}

else {

x.row(i) = i - rows;

}

}

for (int i = 0; i < cols; ++i) {

if (i <= cols / 2) {

y.col(i) = i;

}

else {

y.col(i) = i - cols;

}

}

}

// fft transform followed by spectrum shift

void fftshift(cv::Mat &plane0, cv::Mat &plane1)

{

// The following operation moves the image (zero frequency shift to the center)

int cx = plane0.cols / 2;

int cy = plane0.rows / 2;

cv::Mat part1_r(plane0, cv::Rect(0, 0, cx, cy)); // element coordinates are represented as (cx, cy)

cv::Mat part2_r(plane0, cv::Rect(cx, 0, cx, cy));

cv::Mat part3_r(plane0, cv::Rect(0, cy, cx, cy));

cv::Mat part4_r(plane0, cv::Rect(cx, cy, cx, cy));

cv::Mat temp;

part1_r.copyTo(temp); //swap top-left and bottom-right positions (real part)

part4_r.copyTo(part1_r);

temp.copyTo(part4_r);

part2_r.copyTo(temp); //exchange position between top-right and bottom-left (real part)

part3_r.copyTo(part2_r);

temp.copyTo(part3_r);

cv::Mat part1_i(plane1, cv::Rect(0, 0, cx, cy)); //element coordinates (cx,cy)

cv::Mat part2_i(plane1, cv::Rect(cx, 0, cx, cy));

cv::Mat part3_i(plane1, cv::Rect(0, cy, cx, cy));

cv::Mat part4_i(plane1, cv::Rect(cx, cy, cx, cy));

part1_i.copyTo(temp); //swap top-left and bottom-right positions (imaginary part)

part4_i.copyTo(part1_i);

temp.copyTo(part4_i);

part2_i.copyTo(temp); //exchange position between upper right and lower left (imaginary part)

part3_i.copyTo(part2_i);

temp.copyTo(part3_i);

}

Mat powZ(cv::InputArray src, double power) {

cv::Mat dst;

cv::pow(src, power, dst);

return dst;

}

Mat sqrtZ(cv::InputArray src) {

cv::Mat dst;

cv::sqrt(src, dst);

return dst;

}

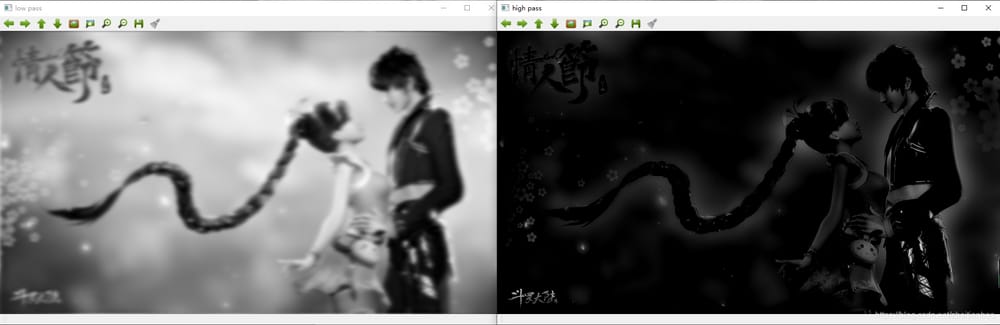

テスト結果

フィルタパラメータが異なると、フィルタサイズも異なり、結果も異なる〜。

また、私のコードに何か問題があれば、遠慮なく反対や批判をしてください、そうすれば一緒に改善できますよ〜。

もしこの記事が役に立ったなら、いいね!で教えてくれたらうれしいです~!乾杯

関連

最新

-

nginxです。[emerg] 0.0.0.0:80 への bind() に失敗しました (98: アドレスは既に使用中です)

-

htmlページでギリシャ文字を使うには

-

ピュアhtml+cssでの要素読み込み効果

-

純粋なhtml + cssで五輪を実現するサンプルコード

-

ナビゲーションバー・ドロップダウンメニューのHTML+CSSサンプルコード

-

タイピング効果を実現するピュアhtml+css

-

htmlの選択ボックスのプレースホルダー作成に関する質問

-

html css3 伸縮しない 画像表示効果

-

トップナビゲーションバーメニュー作成用HTML+CSS

-

html+css 実装 サイバーパンク風ボタン

おすすめ

-

ハートビート・エフェクトのためのHTML+CSS

-

HTML ホテル フォームによるフィルタリング

-

HTML+cssのボックスモデル例(円、半円など)「border-radius」使いやすい

-

HTMLテーブルのテーブル分割とマージ(colspan, rowspan)

-

ランダム・ネームドロッパーを実装するためのhtmlサンプルコード

-

Html階層型ボックスシャドウ効果サンプルコード

-

QQの一時的なダイアログボックスをポップアップし、友人を追加せずにオンラインで話す効果を達成する方法

-

sublime / vscodeショートカットHTMLコード生成の実装

-

HTMLページを縮小した後にスクロールバーを表示するサンプルコード

-

html のリストボックス、テキストフィールド、ファイルフィールドのコード例